Manifesto

These are the brightest times of the data-science era, which continues to deliver invaluable technological advances for the betterment of society. Jim Gray, winner of the prestigious Turing Award, recognised data science as the fourth paradigm of science, alongside experiment, theory and computation.

Interestingly, Scientific Modelling is still a heavily hypothesis-driven process, strongly biased by the subjective thinking of the human mind. The recent surge of Data Science techniques opens the way to innovative modelling paradigms. Regardless of the complexity of the phenomena under investigation, data-driven regression procedures pursue an implicit, unbiased learning approach based on raw data from real observations.

The UN-BIASED project aims to develop an innovative Scientific Modelling (SM) paradigm that closely intertwines data-driven (DD) and hypothesis-driven (HD) techniques to reduce, if not correct, possible cognitive biases arising from the Modeller’s subjective understanding of reality.

The goal is to demonstrate the proposed methodology on a complex application of great interest to the aerospace industry, namely tilt-rotor and multi-rotor machines, which are at the cutting edge of the modern aeronautical industry. Their unique capability of combining vertical take-off and landing with high cruise speed, comfort and range makes them very attractive for the short-haul regional market, with particular reference to electric Urban Air Mobility, search and rescue, emergency medical services and service to isolated areas. Several multi-rotor configurations are currently being developed for air-taxi applications. Despite the large amount of resources committed by the industry, many challenges remain unresolved. In particular, performance predictions are hampered by the complexity of the aerodynamics in diverse flight configurations, e.g., hover and the transition from vertical to horizontal flight. In this context, the industry calls for revolutionary modelling paradigms to design machines with improved performance.

This action aims to meet this need by crafting an agnostic multi-fidelity modelling framework that establishes a synergy between the theory-to-data and data-to-theory perspectives, in order to identify and possibly mitigate epistemic uncertainty in experimental and computational models of tilt-rotor aerodynamics.

State of the art

Multi-fidelity modelling methods combine data sets that differ enormously in information content, size and behaviour. Pieces of information of diverse fidelity and complexity complement each other, improving the accuracy of the resulting estimates. In past decades, these methods have been successfully employed in many applications, including aerospace design and optimisation.

Clearly, in a multi-fidelity setting it is fundamental to establish the correct hierarchy of data credibility with respect to the reality of interest. Unfortunately, this credibility can vary significantly, anywhere between low and high. In particular, the complexity of aerospace applications usually makes the direct estimation of data fidelity difficult, if not intractable.
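As a minimal illustration of how sources of different fidelity can complement each other once their hierarchy is known, the sketch below assumes a cheap low-fidelity model f_L and a few expensive high-fidelity samples, and fits a linear-scaling correction f_H(x) ≈ ρ f_L(x) + c by ordinary least squares. This is a simple textbook scheme swapped in for illustration (in the spirit of autoregressive multi-fidelity models), not the project's method; the function names and toy models are hypothetical.

```python
from typing import Callable, Sequence


def fit_linear_correction(
    f_low: Callable[[float], float],
    x_high: Sequence[float],
    y_high: Sequence[float],
) -> tuple[float, float]:
    """Fit y_high ~ rho * f_low(x) + c by ordinary least squares.

    Hypothetical helper: f_low is assumed cheap to evaluate, while only a
    few high-fidelity samples (x_high, y_high) are available.
    """
    lows = [f_low(x) for x in x_high]
    n = len(lows)
    mean_l = sum(lows) / n
    mean_h = sum(y_high) / n
    cov = sum((l - mean_l) * (h - mean_h) for l, h in zip(lows, y_high))
    var = sum((l - mean_l) ** 2 for l in lows)
    if var == 0:
        raise ValueError("low-fidelity values must vary across the samples")
    rho = cov / var             # scaling between fidelity levels
    c = mean_h - rho * mean_l   # constant discrepancy term
    return rho, c


def multi_fidelity_predictor(
    f_low: Callable[[float], float], rho: float, c: float
) -> Callable[[float], float]:
    """Return a predictor that corrects the low-fidelity model."""
    return lambda x: rho * f_low(x) + c


if __name__ == "__main__":
    # Toy example: the cheap model captures the trend but not scale and offset.
    f_low = lambda x: x ** 2
    x_high = [0.0, 1.0, 2.0, 4.0]              # few expensive samples
    y_high = [2 * x ** 2 + 1 for x in x_high]  # synthetic high-fidelity data
    rho, c = fit_linear_correction(f_low, x_high, y_high)
    predict = multi_fidelity_predictor(f_low, rho, c)
    print(rho, c, predict(3.0))
```

The correction is only meaningful if the low-fidelity source really sits below the high-fidelity one in the credibility hierarchy, which is exactly the assumption that is hard to establish in aerospace applications.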

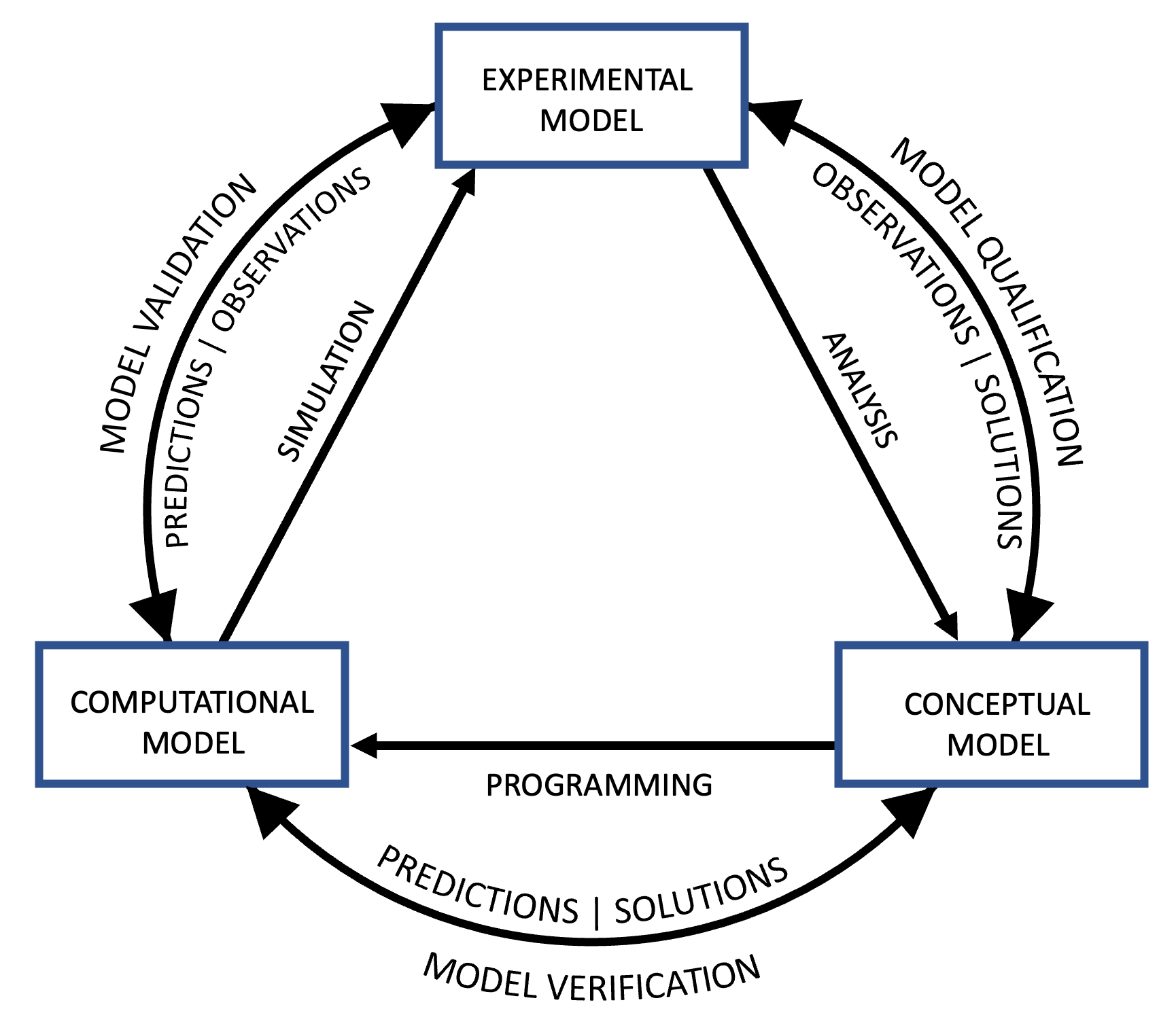

The cornerstone for establishing confidence in data from computer simulations, i.e., from HD models, is the Verification and Validation (V&V) process. In 1979, the Society for Computer Simulation (SCS) proposed a graphical representation of V&V, the so-called Sargent circle, shown in the figure above. Since then, diverse interpretations have been proposed. Interestingly, V&V is always described as an iterative, deductive, clockwise process.

Sargent circle for V&V as established by the SCS (Schlesinger, S., "Terminology for model credibility", Simulation, Vol. 32, No. 3, 1979, pp. 103-104).

With reference to the figure above, the conceptual model is a collection of hypotheses, theories and equations intended to describe the reality of interest; it is formulated on the basis of a subjective analysis. The conceptual model is then implemented (programming) as a computerised model, i.e., an operational program employed to obtain numerical predictions. To assess the credibility of computer simulations, predictions are compared against observations, with a systematic treatment of the inherent uncertainty. Ultimately, the V&V process attempts to qualify the conceptual model by answering the following questions:

Verification: Did we implement the model right?

Validation: Did we implement the right model?
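One common way to make the comparison of predictions against observations concrete, with a systematic treatment of uncertainty, is the validation metric used in, for example, the ASME V&V 20 standard. The comparison error E = S - D between a simulation result S and an experimental datum D is judged against the validation uncertainty u_val = sqrt(u_num^2 + u_input^2 + u_D^2). The sketch below assumes that metric, independent uncertainty sources and a user-chosen coverage factor k; the function and field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ValidationResult:
    comparison_error: float        # E = S - D
    validation_uncertainty: float  # u_val
    within_uncertainty: bool       # |E| <= k * u_val


def validation_check(
    simulation: float,
    measurement: float,
    u_num: float,
    u_input: float,
    u_exp: float,
    k: float = 2.0,
) -> ValidationResult:
    """Compare one prediction with one observation (V&V 20-style metric).

    u_num, u_input and u_exp are standard uncertainties of the numerical
    solution, the simulation inputs and the experimental datum. They are
    assumed independent, so they combine in quadrature. The coverage
    factor k (default 2.0) is an illustrative choice.
    """
    error = simulation - measurement
    u_val = math.sqrt(u_num ** 2 + u_input ** 2 + u_exp ** 2)
    return ValidationResult(error, u_val, abs(error) <= k * u_val)
```

The metric takes the experimental datum D at face value. The credibility of the experiment enters only through u_exp.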

Models are improved through this iterative, deductive, clockwise process, which is repeated until acceptable agreement is achieved between experimental data and numerical predictions. By contrast, DD models are employed to learn the physics underlying a specific phenomenon from raw data alone, representing an inductive approach to modelling. If properly trained, the algorithm is effectively a black-box computerised model (implementing unknown physics) capable of returning predictions. Interestingly, the credibility assessment of DD models still proceeds clockwise, comparing predictions against observations, to answer a single question:

Validation: Did we learn the model properly?

We identify a major flaw in unfolding the V&V process only in the clockwise direction: the whole procedure relies entirely on comparing predictions against observations. Unfortunately, observations often refer to a simplified, partial reproduction of reality. Therefore, the credibility of predictions, whether DD or HD, is strictly tied to the credibility of the experiment. Yet experiments, regarded as faithful reproductions of reality, are rarely questioned. In this view, the clockwise approach makes it difficult to establish a fidelity hierarchy between DD and HD predictions. Since the deductive SM approach is strongly hypothesis-driven and inherently biased, modelling errors due to strong a priori beliefs are difficult to detect if the experiments themselves are invalid.

Typically, experiments are used to validate computational fluid dynamics (CFD) simulations, ultimately seeking confirmation that the numerics accurately predict reality. Instead, the experiments themselves should be questioned and fully understood, to avoid a widespread biased perspective with potentially dramatic consequences for the aircraft design process.

Given the many examples found in the literature, there is a strong need to develop new methodologies capable of improving the Modeller’s understanding of reality, so as to mitigate the potential epistemic uncertainty affecting both the experimental and the conceptual models. DD models are commonly employed as black boxes merely to obtain predictions, and little attention is given to translating the learned patterns into interpretable theories or to identifying epistemic uncertainty. Leveraging the wealth of existing scientific knowledge may improve the effectiveness of DD models in enabling scientific discovery, allowing the physics of a system to be inferred within an inductive reasoning perspective and thus limiting the role of the Modeller’s prior beliefs.

Objectives and key innovations

This project aims to establish a novel multi-fidelity SM paradigm that systematically combines the deductive and inductive logical reasoning approaches, to mitigate the consequences of subjective modelling choices and improve the Modeller’s understanding of a given reality of interest. The goal is to demonstrate the methodology in aerospace applications, focusing specifically on improving the understanding of the aerodynamics of tilt-rotor machines. Several key innovations need to be introduced within this new paradigm.

Overall methodology

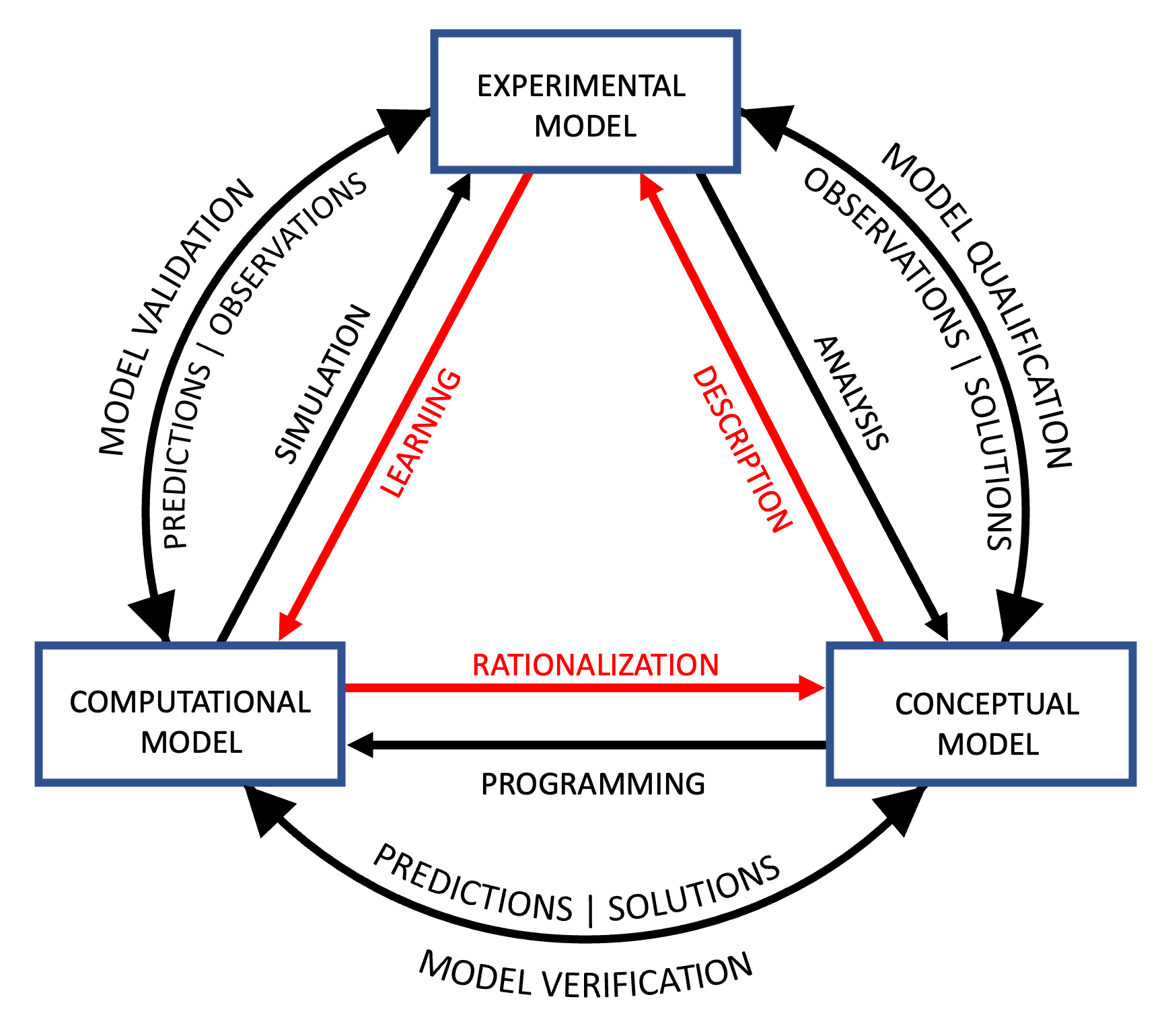

The UN-BIASED project proposes to invert the perspective on the V&V loop, switching from a theory-to-data to a data-to-theory approach, i.e., from the clockwise to the anti-clockwise direction, and formalising a novel framework for validating models and improving our understanding of reality. This is not intended as a substitute for the classical V&V paradigm but rather as an extension, achieved by integrating a data-to-theory plausibility assessment.

Extended Sargent circle. The classical process (clockwise, black) is complemented by an inverse process built around DD models (anti-clockwise, red).

With reference to the figure above, the UN-BIASED project proposes to build DD models able to mimic the behaviour of the system under investigation, i.e., tilt-rotor wind-tunnel tests, with a reasonable level of accuracy (learning). The idea is then to use the trained models to infer the physics underlying the experiment (rationalisation process). Indeed, the learning phase involves selecting the functional form of the DD model, together with its parameters and hyperparameters. Since a multitude of choices is available, analysing the structure of the DD model that provides the best fit can yield interesting results, e.g., a valuable interpretation of the properties of the data (i.e., of the experiment). In other words, the goal of the rationalisation process is to justify and explain DD model predictions in a seemingly rational or logical manner, making the underlying physics model acceptable by plausible means.

Rationalisation is the inverse of implementing (programming) a conceptual model: it corresponds to establishing, within an inductive logical reasoning framework, the conceptual model underlying the physics learnt by the algorithm. This can be achieved through an a posteriori analysis of a DD model trained under specific physical constraints. Indeed, without the guidance of theoretical knowledge, several structures of similar accuracy but increasing complexity may be equally reasonable for a DD model. By enforcing plausibility constraints stemming from fundamental principles, we guide the learning towards physically consistent solutions, assuming that the performance of a DD model is given by

$$\text{Performance} \propto \text{Accuracy} + \text{Simplicity} + \text{Physics plausibility}.$$
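The criterion above leaves open how the three terms are measured and weighted. As a hypothetical sketch, assume each term is expressed as "higher is better" and the terms are combined linearly with user-chosen weights. Accuracy is the negative mean squared error on the data, simplicity is the negative parameter count, and physics plausibility is the negative fraction of grid steps that violate an assumed physical constraint (here, monotonicity). The candidate models, weights and constraint are illustrative assumptions, not the project's formulation.

```python
import math
from typing import Callable, NamedTuple, Sequence


class Candidate(NamedTuple):
    name: str
    predict: Callable[[float], float]
    n_params: int


def accuracy(c: Candidate, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Negative mean squared error (higher is better)."""
    return -sum((c.predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def simplicity(c: Candidate) -> float:
    """Negative number of parameters (higher is better)."""
    return -float(c.n_params)


def plausibility(c: Candidate, grid: Sequence[float]) -> float:
    """Negative fraction of grid steps violating an assumed monotonicity constraint."""
    values = [c.predict(x) for x in grid]
    violations = sum(1 for a, b in zip(values, values[1:]) if b < a)
    return -violations / (len(values) - 1)


def performance(
    c: Candidate,
    xs: Sequence[float],
    ys: Sequence[float],
    grid: Sequence[float],
    w_acc: float = 1.0,    # illustrative weights, not calibrated
    w_simp: float = 0.01,
    w_phys: float = 10.0,
) -> float:
    """Weighted sum mirroring Accuracy + Simplicity + Physics plausibility."""
    return (w_acc * accuracy(c, xs, ys)
            + w_simp * simplicity(c)
            + w_phys * plausibility(c, grid))


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2.0 * x for x in xs]             # toy "observations"
    grid = [0.1 * i for i in range(41)]    # dense grid over [0, 4]
    candidates = [
        Candidate("linear", lambda x: 2.0 * x, 2),
        # Matches the data points (almost) exactly but oscillates between them.
        Candidate("wiggly", lambda x: 2.0 * x + math.sin(math.pi * x), 3),
    ]
    best = max(candidates, key=lambda c: performance(c, xs, ys, grid))
    print(best.name)
```

Both candidates fit the observations almost equally well. The plausibility term penalises the oscillating structure, and the simplicity term also favours the linear candidate.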

In the proposed setting, the anti-clockwise verification step would check whether a conceptual model developed in the rationalisation process is coherent with the Modeller’s fundamental assumptions. Whenever a rationalised DD model violates a premise, this indicates that the Modeller’s understanding of the experiment is biased. The goal is to expose differences between the inferred physical laws and the Modeller’s subjective beliefs, thus providing a way to identify epistemic uncertainty. In the inductive perspective, V&V corresponds to answering the following two questions:

Validation: Did we learn the model properly?

Verification: Did we learn the proper model?
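The verification question can be illustrated with a deliberately simple, hypothetical case. Suppose the rationalisation step reduces a trained DD model of rotor thrust to a power law T = a Ω^b. Suppose also that the Modeller's premise is the classical rotor scaling T ∝ Ω² at constant thrust coefficient, i.e., b = 2. The sketch below fits b by least squares in log-log space and flags the premise as violated when the inferred exponent departs from the assumed one by more than a tolerance. The power-law form, the synthetic data, the tolerance and the function names are assumptions made for illustration.

```python
import math
from typing import Sequence


def fit_power_law_exponent(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Least-squares slope of log(y) versus log(x), i.e. b in y = a * x**b."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    num = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    den = sum((u - mx) ** 2 for u in lx)
    return num / den


def premise_holds(inferred: float, assumed: float, tol: float) -> bool:
    """True if the inferred exponent is coherent with the Modeller's premise."""
    return abs(inferred - assumed) <= tol


if __name__ == "__main__":
    # Hypothetical rotor speeds and synthetic "learned" thrust behaviour.
    omega = [100.0, 150.0, 200.0, 250.0, 300.0]
    thrust = [0.5 * w ** 1.8 for w in omega]
    b = fit_power_law_exponent(omega, thrust)
    if not premise_holds(b, assumed=2.0, tol=0.05):
        print(f"Premise violated: inferred exponent {b:.3f}, assumed 2.0")
```

A failed check does not say which side is wrong. It only exposes a mismatch between the inferred law and the premise, which must then be traced back to the conceptual model or to the experiment.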

The first question is inherited from the classical approach, but it now takes on additional significance, since its answer provides feedback for inferring the physics of the experiment. The second assesses whether the inferred physics is coherent with the Modeller’s understanding of it in terms of assumptions and hypotheses. Any incoherence highlights biases that can be corrected by modifying the conceptual model (or the experiment, depending on the reality of interest). At the same time, comparing DD and HD predictions quantifies the epistemic uncertainty. Ultimately, the proposed dual V&V approach could reduce the subjectivity the Modeller brings to the modelling process.
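As a minimal sketch of how a comparison of DD and HD predictions could be reduced to numbers, assume both models are evaluated at the same operating conditions. The gap between them can then be summarised by its root-mean-square and maximum absolute values. These two indicators and the function name are illustrative assumptions; the project may adopt a different measure.

```python
import math
from typing import Sequence


def model_discrepancy(dd_pred: Sequence[float], hd_pred: Sequence[float]) -> dict[str, float]:
    """Summarise the gap between DD and HD predictions at shared conditions.

    Hypothetical indicator: RMS and maximum absolute difference. A large gap
    flags conditions where the inferred physics and the conceptual model disagree.
    """
    if len(dd_pred) != len(hd_pred) or not dd_pred:
        raise ValueError("predictions must be non-empty and paired")
    diffs = [d - h for d, h in zip(dd_pred, hd_pred)]
    rms = math.sqrt(sum(e * e for e in diffs) / len(diffs))
    return {"rms": rms, "max_abs": max(abs(e) for e in diffs)}
```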